It has been a long time since I wrote a post that is a real blog, but the trip to Malpelo deserved it.

I decided to take two cameras with me so that I could shoot topside without the hassle of taking the camera out of its housing.

Things to know about Malpelo

Malpelo is a small rocky island off the coast of Colombia. It is uninhabited, apart from the thousands of birds that live on it and the few soldiers who run the military outpost.

It lies around 500 km from the mainland and is 1.5 km long and 640 meters wide at its widest point. The island is volcanic and, despite being a Sanctuary of Flora and Fauna, has no vegetation on it.

During our stay we had several thunderstorms, which produced small waterfalls from the cracks in the rocks.

There is no mobile phone coverage once you sail off the coast. The only way to get a signal is with a satellite phone.

Malpelo has two weather seasons, and dive conditions change considerably between them:

- January to April – Dry season

- May to December – Wet season

Apart from the rain, the main differences are the water temperature and the depth of the thermocline.

Most people go to Malpelo to see hammerheads, and as the water gets warmer the thermocline goes deeper, and so do the hammerheads.

On the other hand, visibility improves during the wet season. If you are into video or photography, you can get 20+ meters of visibility compared to 10-15 meters in the dry season.

I went in June, which meant schooling hammerheads out in the blue and a deep thermocline. I knew this, but other guests didn’t. They were either disappointed or kept pushing the dive guide out into the blue to find the sharks, which frankly was not ideal.

Only two boats visit Malpelo regularly: the MV Ferox, which is the one I used, and the Sea Wolf. The Ferox caters more to international travellers and is built entirely of steel, being a former Swedish minesweeper. The Sea Wolf has an aluminum hull. It offers more comfortable living areas but is affected much more by sea conditions, and its clientele is mostly local or South American. The two boats share a fixed number of permits, so only one boat is at the island at any time. The exception is arrival and departure days, when you may cross paths with the other one.

The trip starts from the Intercontinental hotel in Cali. You reach Buenaventura after a coach ride of more than 3 hours on extremely winding roads with many tunnels, then board the MV Ferox RIBs directly from the tourist port of Buenaventura. Because of the level of crime there, you spend the absolute minimum amount of time in the town. As of June 2023, my travel insurance did not flag the destination as safe.

I shot a short clip of the boarding process that should give a good idea of what it is like.

As you can see from the clip, the boat is not exactly luxurious. I was in a full-size cabin, so I could set up my camera inside. All the other cabins except the doubles were smaller. There is no dive deck, because you dive from the RIBs, and the upper deck became extremely slippery the moment it rained.

Transit to Malpelo

The Ferox leaves after dinner on day 1 and then travels non-stop until arrival. Conditions were pretty calm during my crossing, and the boat arrived in Malpelo at night.

Once there, the boat stayed on a mooring on the north-east side of the island, in front of the La Cara del Fantasma dive site and close to the military outpost.

If you are prone to seasickness, note that the boat is attached to a single mooring point and therefore moves with the wind and the waves. In addition, the generator that provides the lighting is quite noisy and runs non-stop. My earplugs were not good enough for a quiet night, so I recommend investing in heavy-duty ones, such as those made for shooting.

Dive Operation

The dive operation is absolutely superb in terms of logistics and organisation.

The two RIBs travel on the deck of the boat during the crossing and are lowered into the water by crane on arrival. The crew sets up the scuba gear during the crossing and loads it onto the RIBs, where it stays for the whole trip. At the end it is unloaded and the RIBs are lifted back on board.

Tanks are filled directly on the RIB. This setup is fantastic, because you only have to get yourself onto the boat while your camera is passed to you. Given the surface conditions, any other arrangement would not be advisable.

The Diving

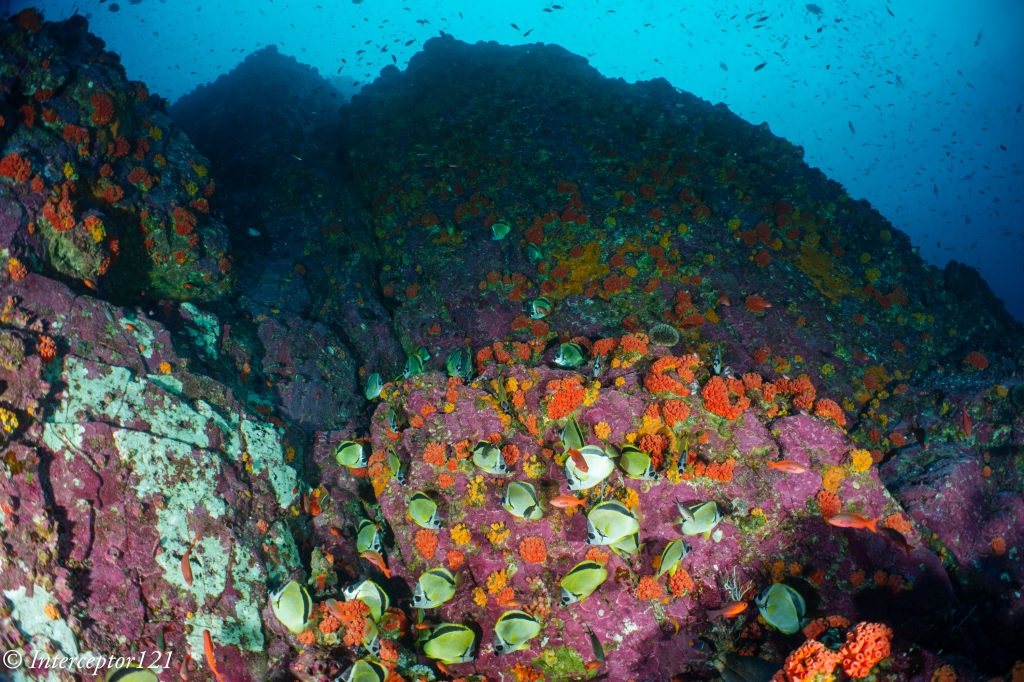

After reading several sources, I was expecting completely barren rocks with no coral, but this proved to be wrong. There are hard and soft corals. They are not what you would find in Egypt, but there are plenty to support macro life, which of course was not the main objective of anyone on the trip.

Fish Schools

The huge aggregations of fish are one of the most attractive features of Malpelo in the wet season. When I say huge, I mean that the lens I brought for them was simply not wide enough, and it is the lens I use for the local schools in Egypt.

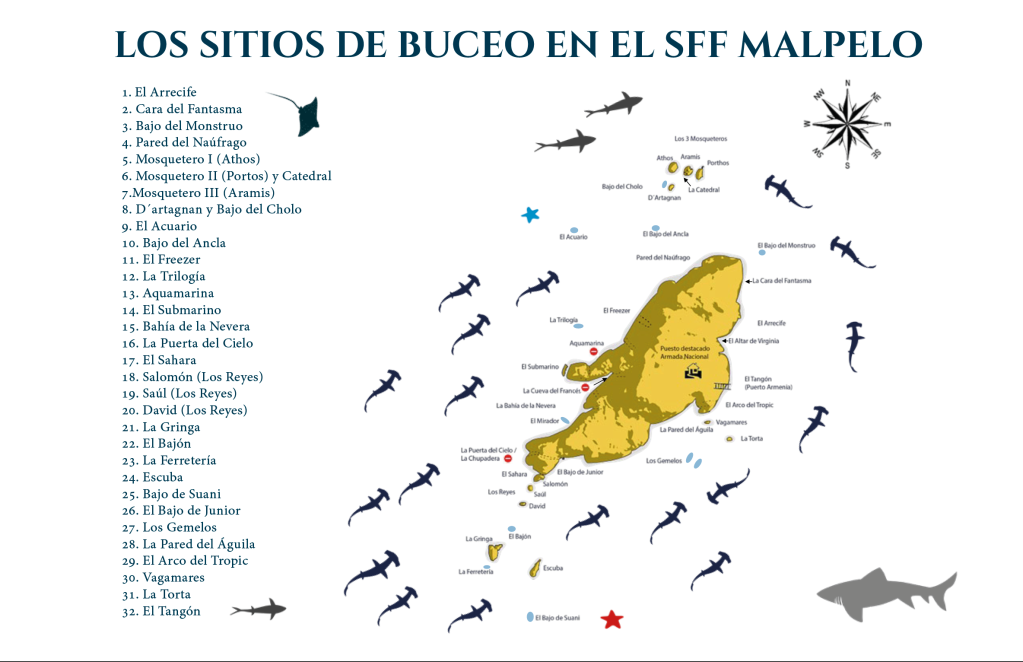

The Three Mosqueteros, pinnacles to the north of the island, were the best sites for schools, to the great joy of photographers and videographers.

The school of trevallies there is without doubt the biggest school I have seen to date, and it lends itself to all sorts of wall-to-wall shots.

My absolute favourites, though, were the snappers, because of their coloration.

At one dive site I even saw two schools of different species swimming together in a sort of alliance.

The Giant Moray – Queen of Malpelo

Although people go to Malpelo for sharks, a special mention goes to the number of (free-swimming) giant morays. The population is huge, and this fish is a real pleasure to watch and capture with your camera.

There are of course other reef fish and some of the least shy hawkfish I have ever seen.

What about Sharks?

And here comes the elephant in the room. Did we see hammerheads? Yes, but the largest school was about 12 sharks, and it was far away in the blue.

Guides discourage pointing strobes or video lights into the blue (why would you do that anyway?). However, it is fine to light the sharks when you shoot against the reef.

La Nevera is probably the best site for hammerheads, thanks to its strategically placed cleaning stations.

However, with warmer water there are other sharks to see in Malpelo.

Firstly, there are Galapagos sharks. Some specimens are really large, and they do come close.

Sharks tend to turn away when they hear your shutter or the ready sound of your strobes, which is why some of them look as if they are turning away. I used a Canon 8-15mm with a 1.4x teleconverter for those shots, so the sharks are closer than they look.

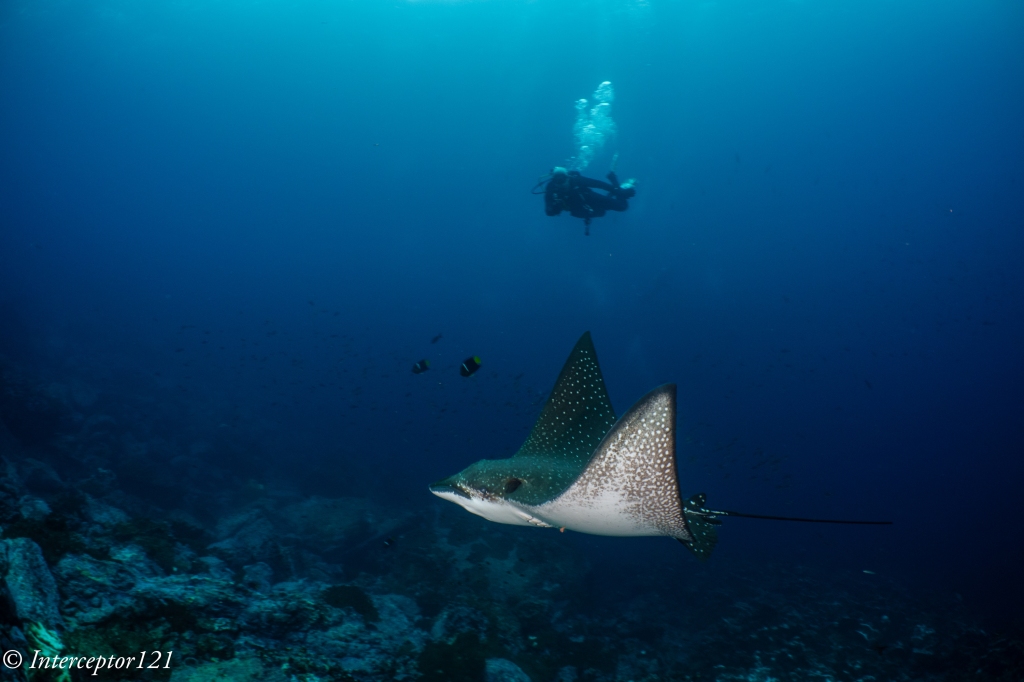

Eagle rays are easier subjects to capture, and we saw up to six together. It is by no means a large aggregation, but they are quite easy to track.

White tip sharks were quite common as well.

We also saw tiger sharks. Their presence is one of the reasons we did not see many silky sharks on the same sites, since tiger sharks can eat them. Humpback whales were seen in the distance, and a whale shark came under our RIB after a dive at El Monstruo.

Crew

Special mention goes to the dive guides of the Ferox. I was in a Spanish-speaking group with Richi Valens, who did an awesome job. The other guide, Sten, is also very well known and a long-time guide in Malpelo, having worked with a previous operator that departed from Panama.

The whole boat crew was very helpful, and for once the food was light: not the usual fatty food you get on some other liveaboards. Beers were $2 per can, but a whole bucket was served free after the last dive.

The skippers of the two RIBs are simply incredible for their mix of agility, seamanship and strength, pulling a BCD up by the tank neck with one hand.

Conservation Efforts

While there, we met Camilo Abella, a park ranger with a degree in biology and excellent English. More info on the efforts of the rangers here.

A catamaran from Biodiversity Colombia patrols the island at all times to stop the illegal fishing of sharks. The people on board work in 3-month shifts to keep things as they are.

I left a donation when I was there and I hope you will do the same after reading this blog.

A Few Other Considerations

I guess many people will be wondering whether Malpelo is for them. The answer changes a lot depending on your preferences and priorities.

If you need a luxury liveaboard or mind rough surface conditions, Malpelo is generally not for you. I would also add that for photographers who want pictures of hammerhead sharks, there are places where this is considerably easier. Cocos Island, where you can sit on the bottom and wait for the sharks, is definitely a safer option than Malpelo. In Malpelo, contact with the bottom is broadly not allowed, except with a few fingers when there is a ripping current. Even Galapagos, despite its reputation, has considerably easier diving conditions. To understand how tough Malpelo is, look at the entries and exits from the tenders. In Galapagos you still get in and out of the tender with your gear, whereas in Malpelo that is very risky business. Many dives require short crossings from one pinnacle to another in strong currents, and most people are forced to dive with 15-liter tanks. If your air consumption is too high and you are on a 12-liter tank, the guides tell you to change so that the dive is not cut short for the group. If you are running low, you are also asked to go higher and stay in the group's bubbles, but this is not a great idea.

In terms of safety, you need a surface marker buoy and a flashlight. A beacon to locate you if you get lost is provided. I also carried a knife and a reel, which I had to use on one occasion. During the trip I realised that smaller domes are really preferable: I saw a difference of 20 bar in air consumption when I switched from the WWL-1, with its 5.5″ dome, to a larger 180 mm wide-angle port. In addition, I strained my left arm carrying my 7 kg video rig in and out of the RIB and in and out of the cabin, despite getting help. Very heavy rigs are not ideal for the negative back-roll entries required at every site. Going in first and waiting for the skipper to pass you the camera is not a good idea either, because the group may have drifted away in the meantime.

Would I go back? Immediately, and next time in the dry season.